Commitment to Quality

Aron's eyes sparkle when he talks about his job in testing; he speaks eagerly, yet with methodical earnestness. He is just as at home on a DJ mixer in live performances as he is at the computer keyboard, and he is well aware of the value of his team, aptly named Digital Experience. To describe his world, he begins at step one: definitions.

QA vs. Testing

Although the terms testing and quality assurance (QA) are often used interchangeably, QA is about creating and sustaining a reliable system for producing high-quality output, whereas testing deals with the details of finding bugs so that the code can be fixed. The purpose of testing is to verify that the code works as intended and satisfies its requirements.

To summarize: quality assurance is the overarching concept, and tests are its supporting pillars. The focus at Open Systems is on end-to-end quality assurance of the entire SASE technology stack, including when the code changes during update or patch cycles.

QA for Open Systems Customer Portal

The engineers in the Digital Experience team who are in charge of testing new portal features carry a substantial amount of responsibility at Open Systems. It comes with the territory: everything needs testing, and they would much rather empower their colleagues on the network and security teams than become a magnificent bottleneck.

End-to-end quality assurance drives a seamless experience for users and developers alike. It is a priority for Open Systems because the SASE service it delivers is principally about end-to-end operations, not a hodge-podge of point solutions or single products. Developers also take part in an operations rotation on the services they built, spending an average of 20% of their time on customer support calls in Mission Control. That way, the engineers experience their code first-hand, receive real-life feedback, and are motivated to solve real-world problems.

Seamless.

It sounds good, and folks in IT hear it often. But what does it actually mean? The Cambridge Dictionary defines seamless as “happening without any sudden changes, interruption, or difficulty.” It suggests continuity, consistency, and accessibility. In other words, a seamless experience is intuitive and satisfying.


Let’s take the customer portal as an example of user interface (UI) testing.

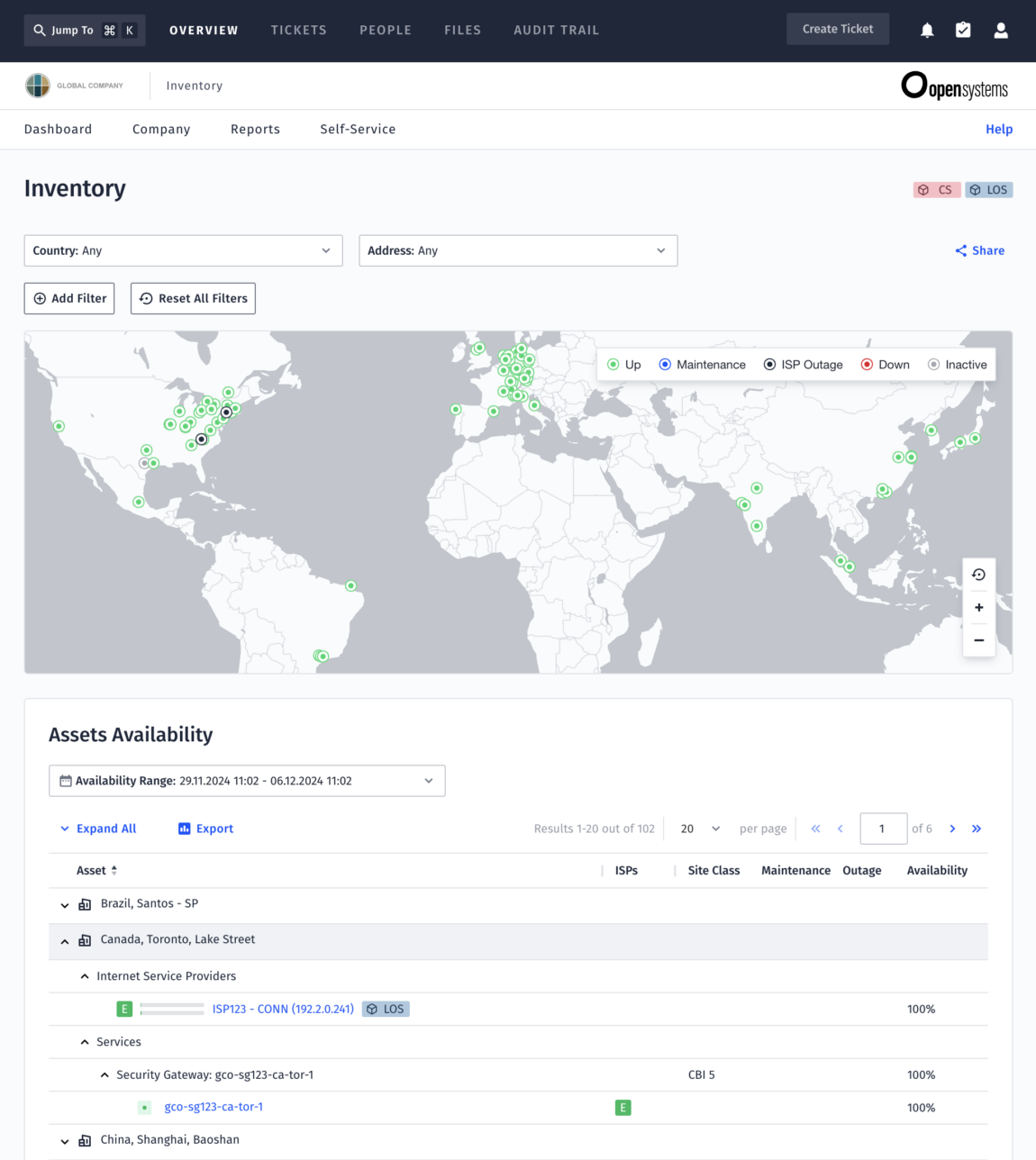

Figure 1: Inventory page in the customer portal.

As the main interface for customers, the portal is also known as a single pane of glass. Its purpose is to provide visibility into networks and applications and to enable precise communication with the 24x7 Operations Center. The portal contains reports and tools to support, implement, and manage global IT security and availability: a ticketing system; statistics about application, network, and device performance; log viewing; configuration details and overviews; and self-service functionality. The greatest challenge for the Digital Experience team is to ensure quality end to end while maintaining the speed of innovation and keeping the entire process of launching new portal features scalable.

Testing 101

Testing is fiddly, not only because of the sheer number of tests, but also because you need to keep track of the results of each one and their interdependencies, and remember what you have already tested and what still requires testing. Then there is the reporting that other teams need to see so they can stay informed and keep track of their own work.

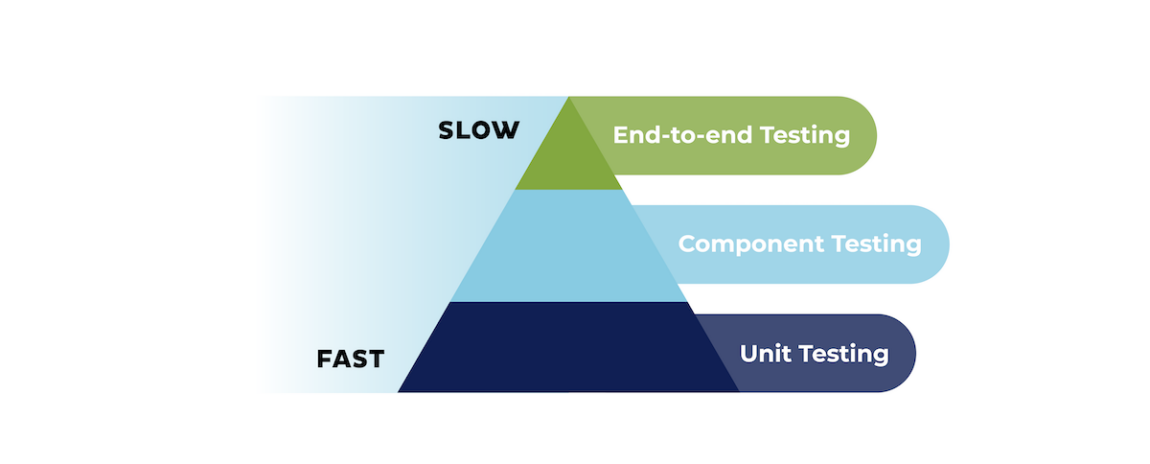

There needs to be structure for quality assurance to reach efficiency and effectiveness. We can consider it as a pyramid whose base is unit tests – many and swift. This is followed by fewer, but more complicated, component tests, whereas at the tip of the pyramid, the end-to-end (E2E) tests are the fewest and most challenging, also requiring more processing power.

Figure 2: Testing pyramid, representing the relative number and speed of test types

Testing is not an option

Before we begin with the how, let’s underline the why.

Testing is not an option; it is a necessity.

Engineers aim for maintainable, extendable code that is easy and safe to refactor[1].

That way, they avoid production issues, and making code changes becomes safer and more fun.

Sustaining a testing culture means designing software so you can write tests easily. It boils down to being aware that huge functions are hard to test, so it makes sense to break them up into smaller, more manageable functions.

This goes hand in hand with continuous integration (CI). In CI, small, frequent changes are routinely merged into the shared codebase, and each change is automatically tested and validated straight away.
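Here is a minimal TypeScript sketch of the advice about huge functions. It assumes a hypothetical status-summary feature in which one function used to parse raw device records, count the online devices, and format a label. That function is split into three small pure functions (`parseRecord`, `countOnline`, `formatSummary`; all names and record shapes are hypothetical), and each of them can be tested on its own.

```typescript
// Raw record as it might arrive from a backend (hypothetical shape).
interface RawDeviceRecord {
  hostname: string;
  state: string; // for example "UP" or "DOWN"
}

// Normalized record used by the rest of the code (hypothetical shape).
interface NormalizedDevice {
  hostname: string;
  online: boolean;
}

// Step 1, pure: normalize one raw record.
function parseRecord(raw: RawDeviceRecord): NormalizedDevice {
  return {
    hostname: raw.hostname.trim().toLowerCase(),
    online: raw.state.trim().toUpperCase() === "UP",
  };
}

// Step 2, pure: count the devices that are online.
function countOnline(devices: NormalizedDevice[]): number {
  return devices.filter((device) => device.online).length;
}

// Step 3, pure: turn the counts into a display label.
function formatSummary(online: number, total: number): string {
  return `${online} of ${total} devices online`;
}

// What used to be one large function is now a thin composition of testable parts.
function buildStatusSummary(records: RawDeviceRecord[]): string {
  const devices = records.map(parseRecord);
  return formatSummary(countOnline(devices), devices.length);
}
```

Each piece now has a narrow input and output, so a failing test points straight at the step that broke.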

Portal parts for testing

In the customer portal, the most basic (atomic) part of a web page, such as a link or an icon, would be covered by a unit test.

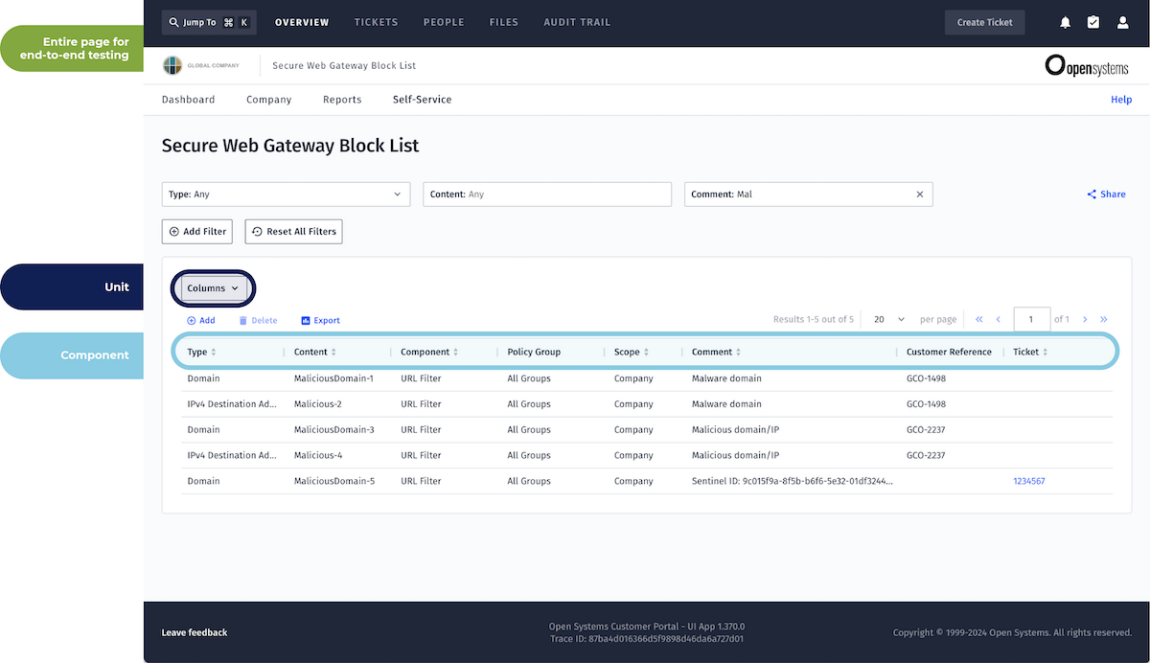

Figure 3: How parts of a customer portal page relate to testing.

A component is a conglomerate of units, but still a small part of a web page, for example a list entry showing a service name, location and status of the deployed service delivery platform. This is a candidate for a component test.

The entire web page including its URL would typically be part of an end-to-end test.

How testing is done

Engineers throughout Open Systems use the pyramid of unit tests, component tests, and E2E tests. In addition, when releases and patches are installed depends on each customer's operations environment. Customers can choose to be early adopters and provide real-life user feedback that helps develop the service capabilities. They can go with the general availability (GA) release. Or they can be late adopters when they have critical systems to maintain.

Unit Tests – run always

Unit tests verify isolated functionality or methods. In other words, the engineers focus on individual units of code and test them without relying on the rest of the system.

A unit test covers one specific unit of code, which means it must be independent of other units of code (or services). Other tests, by contrast, can cover multiple units. A unit test therefore checks whether that unit works as desired, rather than how it works in collaboration with other units. Code can pass its unit tests because it does the "right thing" at the micro level, yet at the macro level it might still not be what is needed.
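A common way to keep a unit independent of other units or services is to pass its dependencies in and to substitute a fake during the test. The sketch below assumes a hypothetical `StatusLookup` interface that, in production, might call a backend. The test uses an in-memory `FakeStatusLookup` instead, so only `statusBadge` itself is exercised. All names and service IDs are invented for illustration.

```typescript
type ServiceState = "up" | "down" | "unknown";

// Hypothetical dependency: in production this might query a backend service.
interface StatusLookup {
  getStatus(serviceId: string): ServiceState;
}

// Unit under test: depends only on the StatusLookup interface, never on a
// concrete network client, so a test can substitute a fake.
function statusBadge(serviceId: string, lookup: StatusLookup): string {
  switch (lookup.getStatus(serviceId)) {
    case "up":
      return "green";
    case "down":
      return "red";
    default:
      return "grey";
  }
}

// Fake dependency backed by an in-memory map: no network, no shared state.
class FakeStatusLookup implements StatusLookup {
  private readonly statuses: Map<string, ServiceState>;

  constructor(entries: Array<[string, ServiceState]>) {
    this.statuses = new Map(entries);
  }

  getStatus(serviceId: string): ServiceState {
    return this.statuses.get(serviceId) ?? "unknown";
  }
}

// Arrange: a fake that knows two hypothetical services.
const fakeLookup = new FakeStatusLookup([
  ["sdp-zurich", "up"],
  ["sdp-lisbon", "down"],
]);

// Act: call the unit under test with the fake.
const badge = statusBadge("sdp-zurich", fakeLookup);

// Assert: compare with the expected value.
if (badge !== "green") {
  throw new Error(`Expected green, got ${badge}`);
}
```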

F.I.R.S.T. principles

The main principles of unit testing are summed up by the acronym F.I.R.S.T.:

- Fast: Everything about a unit test needs to be swift because there can be thousands of them in a project.

- Isolated or independent: A unit test must be self-contained and not reliant on other tests. It follows the Arrange, Act, Assert pattern:

  - Arrange: Set up the data and preconditions the test needs before it runs.

  - Act: Invoke the code under test.

  - Assert: Check that the actual outcome matches the expected outcome.

- Repeatable: No matter how many times or where you run the test, its result must always be the same.

- Self-validating: A test must produce a “yes” or “no” answer, never a “maybe.”

- Timely and thorough: A developer typically writes the code and its test close together in time, while still fully mindful of the code's intricacies, so the code is tested very soon after it is created. The tests also need to cover every relevant use case, including edge cases, which give insight into the boundaries of the user experience.
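The Repeatable principle is easiest to see in code that depends on time. Here is a minimal sketch, assuming a hypothetical SLA check for portal tickets. If the function read the real clock with `Date.now()`, its result would change depending on when the test runs. Instead, the current time is passed in as a parameter, and the test pins it to a fixed instant. The function name and the dates are hypothetical.

```typescript
const MS_PER_HOUR = 60 * 60 * 1000;

// Hypothetical SLA check for a portal ticket. The current time is an explicit
// parameter instead of a call to Date.now(), so the result never depends on
// when or where the test runs.
function isTicketOverdue(openedAt: Date, slaHours: number, now: Date): boolean {
  const elapsedMs = now.getTime() - openedAt.getTime();
  return elapsedMs > slaHours * MS_PER_HOUR;
}

// Arrange: fixed instants, five hours apart (hypothetical values).
const openedAt = new Date("2024-01-01T08:00:00Z");
const fixedNow = new Date("2024-01-01T13:00:00Z");

// Act and Assert: the same answers on every run, on every machine.
if (!isTicketOverdue(openedAt, 4, fixedNow)) {
  throw new Error("Expected overdue after 5h with a 4h SLA");
}
if (isTicketOverdue(openedAt, 8, fixedNow)) {
  throw new Error("Expected not overdue after 5h with an 8h SLA");
}
```

In production, the caller would simply pass `new Date()` as `now`.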

Example

In a simple example, suppose we are in a truck-loading business and want to test a function that adds crates to a truck.

| Function AddCrate | Arrange (data for the test) | Act | Assert (expected value) |
|---|---|---|---|
| A + B = C | Input A = 1, Input B = 2 | AddCrate(1, 2) | C = 3 |

We also need to test for the edge cases. What if A = 0 and B = –2?

Edge cases are interesting because they can point to potential issues on the horizon.
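The AddCrate example translates into a small TypeScript test that follows Arrange, Act, Assert. This is a sketch, not the team's actual code: `addCrate` is a hypothetical function. The choice to reject negative or fractional crate counts with a `RangeError` is an assumption about how the edge case A = 0, B = –2 should be handled.

```typescript
// Hypothetical function under test: adds `added` crates to the `current` load.
// Assumption: crate counts must be non-negative whole numbers.
function addCrate(current: number, added: number): number {
  if (!Number.isInteger(current) || !Number.isInteger(added)) {
    throw new RangeError("Crate counts must be whole numbers");
  }
  if (current < 0 || added < 0) {
    throw new RangeError("Crate counts cannot be negative");
  }
  return current + added;
}

// Arrange: the data for the test.
const inputA = 1;
const inputB = 2;

// Act: call the code under test.
const resultC = addCrate(inputA, inputB);

// Assert: compare the actual result with the expected value.
if (resultC !== 3) {
  throw new Error(`Expected 3, got ${resultC}`);
}

// Edge case from the text: A = 0 and B = -2 must be rejected, not return -2.
let edgeCaseRejected = false;
try {
  addCrate(0, -2);
} catch (error) {
  edgeCaseRejected = error instanceof RangeError;
}
if (!edgeCaseRejected) {
  throw new Error("Expected addCrate(0, -2) to throw a RangeError");
}
```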

A word about Regression Testing

To further protect customers from unnecessary service interruptions, the Digital Experience team puts emphasis on regression testing. In a nutshell, regression testing ensures that new code changes don't adversely affect existing functionality.

Jest, a JavaScript testing framework, is particularly useful at Open Systems because of its speed and ease of setup. Visual regression tests are also possible and useful for the visual aspects of the customer portal. They help ensure that users continue to get a quality experience and that the UX team isn't caught off guard by unintended visual changes.

At Open Systems, Jest is used to validate complex functions that contain pure logic. Here are a few guidelines the engineers follow when writing such tests:

- Write Jest tests for various scenarios you encounter.

- Aim for a regression testing style to continually validate your code during development.

- Prioritize writing Jest tests whenever possible as they are fast and require minimal overhead.

- Prioritize extracting logic to dedicated functions that can be tested this way.
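The guidelines above can be sketched as a table of regression cases: extract pure logic, then cover every scenario you encounter. This sketch assumes a hypothetical `formatThroughput` helper pulled out of a portal chart label. In Jest, the same rows could be passed to `test.each` so that each one is reported as its own test. A plain loop is used here only so the sketch runs without a test framework.

```typescript
// Pure logic extracted from a hypothetical portal chart label.
function formatThroughput(bitsPerSecond: number): string {
  const units = ["bit/s", "kbit/s", "Mbit/s", "Gbit/s"];
  let value = bitsPerSecond;
  let unit = 0;
  // Scale down by 1000 until the value fits the largest sensible unit.
  while (value >= 1000 && unit < units.length - 1) {
    value /= 1000;
    unit += 1;
  }
  // Round to one decimal place; whole numbers print without ".0".
  const rounded = Math.round(value * 10) / 10;
  return `${rounded} ${units[unit]}`;
}

// Each scenario met during development becomes a permanent row, so a later
// change that breaks existing behavior fails immediately.
const regressionCases: Array<[number, string]> = [
  [0, "0 bit/s"],
  [999, "999 bit/s"],
  [1000, "1 kbit/s"],
  [1500000, "1.5 Mbit/s"],
  [2000000000, "2 Gbit/s"],
];

for (const [input, expected] of regressionCases) {
  const actual = formatThroughput(input);
  if (actual !== expected) {
    throw new Error(`formatThroughput(${input}): expected "${expected}", got "${actual}"`);
  }
}
```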

Component Tests – run often

Component isolation and component testing for the customer portal are done with Cypress at Open Systems, including screenshot comparison. This lets the engineers test components in isolation, ensuring that the components behave correctly with different props and states. In this type of testing, it is particularly important not to interact with code outside the component. That is why it is necessary to create “fake objects” such as mocked props and states.

Isolating component tests with Cypress

Here, Cypress is used to ensure that components and their variants are testable and function correctly. Open Systems engineers follow these guidelines:

- Use Cypress component testing for isolated components.

- Build components with props to facilitate easy manipulation.

- Aim for stateless components by avoiding the use of hooks and contexts.

- Keep fetching logic outside the component; process only props within the component.

- Use screenshots to validate design sparingly; leverage other Cypress assertions that are less CI-intensive.

- Threshold settings: Global threshold settings usually suffice but might need reviewing when changing screenshots.
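Cypress component tests mount real framework components, which cannot be reproduced in a self-contained way here. As a framework-agnostic sketch of the same guidelines, the component below is modeled as a plain TypeScript function from props to markup. It holds no state and fetches nothing; the caller supplies the data. The props mirror the list entry described earlier (service name, location, and status). The field names, markup, and mock values are assumptions.

```typescript
type ServiceStatus = "online" | "degraded" | "offline";

// Props for a service list entry (hypothetical field names).
interface ServiceEntryProps {
  serviceName: string;
  location: string;
  status: ServiceStatus;
}

// Escape text before placing it in markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Stateless "component": the output depends only on its props.
// No fetching, no hooks, no context.
function renderServiceEntry(props: ServiceEntryProps): string {
  const label = `${escapeHtml(props.serviceName)} (${escapeHtml(props.location)})`;
  return `<li class="service-entry status-${props.status}">${label}: ${props.status}</li>`;
}

// The list only composes entries. Fetching happens in the caller, which
// passes plain data in; tests pass mocked data instead.
function renderServiceList(services: ServiceEntryProps[]): string {
  return `<ul>${services.map(renderServiceEntry).join("")}</ul>`;
}

// Mocked props: one per variant, no backend required (values are invented).
const mockVariants: ServiceEntryProps[] = [
  { serviceName: "Edge A", location: "Zurich", status: "online" },
  { serviceName: "Edge B", location: "Singapore", status: "degraded" },
  { serviceName: "Edge <C>", location: "Lisbon", status: "offline" },
];
```

Because every variant is just a props object, adding a new state to test means adding one entry to `mockVariants`, not setting up a backend.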

E2E Tests – run selectively

End-to-end tests simulate real user interactions, providing confidence that the application works as expected in a real-world scenario. They are suited to testing elements outside individual components, such as URL parsing, date simulation, link assertions, and navigation. However, because of their complexity and running time, they should be used sparingly. Hence the focus on doing the nitty-gritty testing at the unit and component levels before the end-to-end tests.

The engineering guidelines for end-to-end tests at Open Systems are:

- Limit E2E tests to one per page to minimize overhead, focusing on higher-level interactions.

- Use Cypress E2E testing to visit a page and test specific behaviors such as loading, component existence, URL validation, and element interactions.

- Validate that the view works correctly after data fetching and manipulation.

- Source data from TypeScript fixtures to account for frequent data structure changes.

- Mock global data only if it is used on every page; otherwise, mock page-specific data.

- When testing different variations of a specific view, classify the variants into distinct test types, such as component or unit tests, to keep the test suite scalable.
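Sourcing data from TypeScript fixtures means the compiler checks the mock data against the current data structure. Here is a minimal sketch, assuming a hypothetical `InventoryItem` shape for the inventory page. It uses a typed base fixture plus a small factory, so each test states only the fields that differ. In a Cypress E2E test, such an array could then be served in place of the real backend response, for example with `cy.intercept("GET", url, { body: inventoryPageFixture })`. Here, `url` stands for whatever endpoint the page actually calls.

```typescript
// Shape of one inventory row as the page expects it (hypothetical fields).
interface InventoryItem {
  id: string;
  hostname: string;
  site: string;
  model: string;
  online: boolean;
}

// Base fixture. Because it is typed, renaming or adding a required field in
// InventoryItem makes this file fail to compile, instead of letting a stale
// fixture silently drift from the real data structure.
const baseInventoryItem: InventoryItem = {
  id: "dev-001",
  hostname: "gw-zrh-01",
  site: "Zurich",
  model: "hypothetical-model-a",
  online: true,
};

// Factory for variants: each test states only what differs from the base.
function makeInventoryItem(overrides: Partial<InventoryItem> = {}): InventoryItem {
  return { ...baseInventoryItem, ...overrides };
}

// Page-specific mock data for the inventory page (values are invented).
const inventoryPageFixture: InventoryItem[] = [
  makeInventoryItem(),
  makeInventoryItem({ id: "dev-002", hostname: "gw-sin-01", site: "Singapore", online: false }),
];
```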

Breaking tests to save production

The main concept behind ensuring quality is to write software that is easy to test. It is worth getting the basics right in terms of logic flow, costs, and overall user experience, which means making unit testing a priority. Scalability benefits from this “molecular concept,” which encourages carving out as many stateless components as possible. That way, everything is tested at an individual level, and fewer costly end-to-end tests are needed.

In the same way that every business environment is unique, so is every development environment. That uniqueness also applies to quality assurance, where the testing setup needs to reflect the maturity of the code in question and the speed of development.

At Open Systems, engineers try to break the tests. Because breaking the tests saves production. And the engineers would much rather have tests failing than production failing.

[1] Refactoring: restructuring code to improve it through many small changes, without changing its original, intended functionality.
