Why Tight Integration Is the True Core Component of SASE

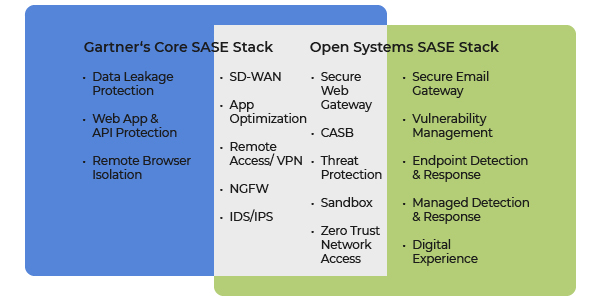

Most IT professionals have by now heard of secure access service edge (SASE), an emerging category of products and services that converges SD-WAN with comprehensive network security functions. In its August 2019 report “The Future of Network Security Is in the Cloud,” Gartner recommends a list of core technologies that any SASE solution should incorporate. It’s a fairly long list, comprising mainstream technologies such as SD-WAN and Secure Web Gateway (SWG) as well as more niche technologies such as Software-Defined Perimeter (SDP) and Remote Browser Isolation (RBI).

Open Systems already offers the majority of these core SASE technologies as a service for cloud and on-premises environments. More importantly, Open Systems’ SASE fulfills another key part of Gartner’s definition of SASE: it should provide integrated network and security services that can be “orchestrated as a single experience from a single console and a single method for setting policy.” It’s this integration, or more specifically “tight integration,” that is the real key to SASE, and it’s where Open Systems excels.

Much goes into tight SASE integration, but let’s start by breaking down the network and security technologies that Gartner says should be integrated into a core SASE stack.

In addition to these components currently specified by Gartner, the Open Systems platform also offers Secure Email Gateway (SEG) as well as Managed Detection and Response (MDR) services.

Of course, a well-architected SASE solution comprises more than just a stack of networking and security components. Why would you want to recreate in the cloud the sprawl of security devices that now exists in your data centers? You’d still have to manage multiple devices from multiple consoles, with all the associated complexity. User experience may not markedly improve either, since users’ traffic would still have to pass through an array of service-chained devices. The point is that these “stitched together” point solutions, whether hosted in the cloud or not, are not SASE. For SASE services to improve user experience and reduce management complexity, you must have tight integration.

What does achieving tighter integration for better performance entail? It may mean reducing the number of service chains, e.g., between the SWG and CASB services, between the DPI engine and the firewall, and so on. It may also mean greater use of “single-pass” execution, in which traffic is decrypted and re-encrypted just once. In other words, all the necessary classification and policy enforcement actions across multiple services, from NGFW to SD-WAN to SWG, are performed on the same decrypted traffic instead of decrypting and re-encrypting at each service, thereby minimizing latency.
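To make the single-pass idea concrete, here is a minimal Python sketch, not Open Systems’ implementation. It assumes a toy model in which each security service needs plaintext to inspect a session, and it counts decrypt and re-encrypt operations for a service chain versus a single pass. The names `CryptoCounter`, `service_chain`, and `single_pass` are hypothetical, and the byte-reversal “cipher” is a stand-in for TLS, not real cryptography.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical inspector type: takes decrypted bytes, returns True if the traffic is allowed.
Inspector = Callable[[bytes], bool]


@dataclass
class CryptoCounter:
    """Counts decrypt/re-encrypt operations so the two designs can be compared."""
    decrypts: int = 0
    encrypts: int = 0

    def decrypt(self, ciphertext: bytes) -> bytes:
        self.decrypts += 1
        return ciphertext[::-1]  # toy stand-in for TLS decryption, NOT real crypto

    def encrypt(self, plaintext: bytes) -> bytes:
        self.encrypts += 1
        return plaintext[::-1]  # toy stand-in for TLS re-encryption


def service_chain(ct: bytes, services: list[Inspector], crypto: CryptoCounter) -> bool:
    """Stitched-together design: every service decrypts, inspects, and re-encrypts on its own."""
    for inspect in services:
        plain = crypto.decrypt(ct)
        if not inspect(plain):
            return False  # blocked; nothing is forwarded
        ct = crypto.encrypt(plain)  # hand ciphertext to the next service in the chain
    return True


def single_pass(ct: bytes, services: list[Inspector], crypto: CryptoCounter) -> bool:
    """Integrated design: decrypt once, run every check on the same plaintext, re-encrypt once."""
    plain = crypto.decrypt(ct)
    allowed = all(inspect(plain) for inspect in services)
    if allowed:
        crypto.encrypt(plain)  # re-encrypt once before forwarding
    return allowed


if __name__ == "__main__":
    services: list[Inspector] = [
        lambda p: b"malware" not in p,          # stand-in for an NGFW/IPS verdict
        lambda p: b"blocked.example" not in p,  # stand-in for an SWG URL check
        lambda p: len(p) < 10_000,              # stand-in for a DLP size limit
    ]
    request = CryptoCounter().encrypt(b"GET https://news.example/ HTTP/1.1")

    chained, integrated = CryptoCounter(), CryptoCounter()
    service_chain(request, services, chained)
    single_pass(request, services, integrated)
    print("service chain:", chained.decrypts, "decrypts,", chained.encrypts, "re-encrypts")
    print("single pass:  ", integrated.decrypts, "decrypt,", integrated.encrypts, "re-encrypt")
```

With three services and allowed traffic, the chained version decrypts and re-encrypts three times per request, while the single-pass version does each once. In a real system the saving shows up as CPU time and latency rather than as a counter.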

Why is tight integration so important?

The main reason we need tighter integration is to achieve consistent visibility and policy enforcement across services. Only when we have common visibility, using consistent terms and contexts, are we in a position to assess what is happening in the environment, decide what to do, implement consistent policies, and troubleshoot problems faster and more accurately.
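One way to picture “consistent terms and contexts” is a shared event schema. The Python sketch below is a hypothetical illustration, not a description of any product: it assumes two point products that log the same user under different field names and formats (`username` versus a domain-qualified `src_user`), normalizes both into one `Event` type, and then correlates activity per user with a simple group-by. In a tightly integrated platform the services would emit the shared identifiers natively, so translation functions like these would not be needed.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    service: str  # which service emitted the event, e.g. "vpn" or "firewall"
    user_id: str  # one user identifier shared by every service
    app_id: str   # one application identifier shared by every service
    action: str   # normalized verdict: "allow" or "block"


# Hypothetical firewall verdict vocabulary mapped onto the shared one.
_FIREWALL_VERDICTS = {"accept": "allow", "drop": "block", "deny": "block"}


def from_vpn(raw: dict) -> Event:
    """Hypothetical VPN log record: {'username': 'ABC', 'result': 'allow'}."""
    return Event("vpn", raw["username"], "vpn", raw["result"])


def from_firewall(raw: dict) -> Event:
    """Hypothetical firewall log record: {'src_user': 'CORP\\ABC', 'l7_app': 'XYZ', 'verdict': 'drop'}."""
    user_id = raw["src_user"].split("\\")[-1]  # strip the domain prefix so IDs match the VPN's
    return Event("firewall", user_id, raw["l7_app"], _FIREWALL_VERDICTS[raw["verdict"]])


def timeline_by_user(events: list[Event]) -> dict[str, list[Event]]:
    """Once every service uses the same user ID, cross-service correlation is a group-by."""
    per_user: dict[str, list[Event]] = defaultdict(list)
    for event in events:
        per_user[event.user_id].append(event)
    return dict(per_user)
```

Feeding in one VPN event for `ABC` and one firewall event for `CORP\ABC` yields a single timeline keyed by `ABC`, with both events in order.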

First and foremost, we need a unified “single-pane” UI from which to manage all of the services, which is easier said than done. Many vendors do not have a single management station, probably because their point solutions came from different sources. Of course, tighter integration is more than just a single-pane UI. It also means using the same terms and values across multiple services. For example, user ID ABC on the VPN gateway should be the same user ID ABC on the firewall, and app ID XYZ should be the same on the L7 firewall and in the path selection algorithm. It can also mean checking whether policies across multiple services conflict with one another. For instance, you don’t want the same URL in both the web proxy bypass list and the web proxy blacklist.
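The two checks described above, shared identifiers and conflicting policies, are straightforward to automate once all services share one policy model. This Python sketch is hypothetical: `ProxyPolicy`, `find_conflicts`, and `check_consistency` are illustrative names, and URLs are compared on a simplified canonical form (lowercased, trailing slash removed) rather than with full URL parsing. A real platform would presumably run checks like these before a policy change is deployed.

```python
from dataclasses import dataclass, field


@dataclass
class ProxyPolicy:
    bypass: set[str] = field(default_factory=set)     # URLs exempt from proxy inspection
    blacklist: set[str] = field(default_factory=set)  # URLs the proxy must block


def canonical(url: str) -> str:
    """Simplified normalization so 'Example.com/' and 'example.com' compare equal."""
    return url.strip().lower().rstrip("/")


def find_conflicts(policy: ProxyPolicy) -> set[str]:
    """URLs that appear on both the bypass list and the blacklist."""
    return {canonical(u) for u in policy.bypass} & {canonical(u) for u in policy.blacklist}


def check_consistency(
    vpn_user_ids: set[str],
    firewall_rules: list[tuple[str, str]],  # (rule name, user ID the rule applies to)
    proxy: ProxyPolicy,
) -> list[str]:
    """Return human-readable findings; an empty list means no inconsistency was detected."""
    findings = []
    for rule_name, user_id in firewall_rules:
        if user_id not in vpn_user_ids:
            findings.append(f"firewall rule {rule_name!r} references unknown user ID {user_id!r}")
    for url in sorted(find_conflicts(proxy)):
        findings.append(f"{url!r} is on both the web proxy bypass list and the blacklist")
    return findings


if __name__ == "__main__":
    proxy = ProxyPolicy(bypass={"Example.com/"}, blacklist={"example.com", "bad.example"})
    rules = [("allow-abc", "ABC"), ("allow-abd", "ABD")]
    for finding in check_consistency({"ABC"}, rules, proxy):
        print(finding)
```

In the usage example the sketch reports two findings: rule `allow-abd` refers to a user ID the VPN gateway does not know, and `example.com` is both bypassed and blacklisted.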

Obviously, I’m simplifying here. Tighter integration across services is hard, but it must be done, because without a common management platform it is almost impossible to provide a high level of service.

Conclusion: Only tight integration provides the true benefits of SASE, and only deep experience can deliver it

In sum, the primary benefit of a tightly integrated SASE component stack is consistent visibility and policy enforcement across multiple services. Deploying this type of solution results in more accurate and effective security policies, fewer conflicting policies, fewer errors, fewer outages, simpler troubleshooting, and more. A second benefit is that tighter integration should improve overall performance, thanks to fewer service chains and greater use of single-pass execution.

Of course, these benefits are obvious to anyone who has managed multiple point solutions. Open Systems, however, has a somewhat deeper appreciation for the value of tight integration. This understanding stems from 20+ years of experience integrating various types of software packages (in-house, open source, and OEM) and operating services for highly demanding customers around the globe. In fact, we offered many of the core components as a service long before the term “SASE” existed.

Open Systems also aligns service development with service operations according to a DevOps model. Developers staff our Mission Control NOC and SOC, and we make it a point to integrate open source and third-party packages into the Open Systems platform as seamlessly as possible. The upfront investment this requires pays off many times over in simpler, less error-prone operations.

To recap, if you’re looking to simplify your network and security operations, be sure to ask potential SASE providers: (1) if they offer your key networking and security functions as a service; and (2) how tightly they have integrated those services.

In the next blog post, I will discuss the role of secure, performant cloud delivery in SASE offerings.